Google Manual Action Penalties & How To Recover [Case Study]

Evoluted recently assisted a client hit with a manual action - a penalty applied by Google to websites they believe to be breaking their guidelines.

Operating in the competitive health and fitness space, the client employs an in-house team managing content and link-building, relying on Evoluted to provide technical and on-site SEO support.

Naturally, we were concerned about the potential for long-term damage to the ongoing success the site had seen in organic search. We were also confused about the cause of the penalty, as the client had historically earned links through ethical, white-hat activities.

In this article, we’ll cover the causes and potential damage from manual actions, and guide you through the recovery process we implemented to help our client get their manual action penalty revoked.

WHAT IS A MANUAL ACTION?

Manual actions are Google’s method of penalising, demoting or sometimes entirely removing websites from their search index if they’re found to be breaking the Google Webmaster Guidelines. The ‘manual’ part comes from the action being applied by a human reviewer, unlike standard algorithmic penalties.

Google employs thousands of human reviewers (quality raters) who review websites against a lengthy quality rating document as well as the Webmaster Guidelines. Sites which receive manual actions often reach these reviewers by way of algorithmic detection or submission of spam reports.

Google has entire teams (like the quality raters) dedicated to fighting spam and the manipulation of search, and applying manual actions is one component of this ongoing battle.

If you receive a manual action, you should take it very seriously. Some sites may see very little impact to their rankings and traffic, just enough to scare them into compliance with the guidelines. Others may see huge drops in traffic and rankings plummeting, often to an extent that the site will never recover from. In the most serious cases, entire sites may be completely removed from the index, impossible to find even when searching for their absolute URLs.

WHY DO GOOGLE IMPLEMENT MANUAL ACTIONS?

We’ve come a long way from the ‘wild-west’ days of early search engines, but as long as search remains such a huge part of our online existence there will be people who try to manipulate their way to the top of the results.

Google have been fairly open about their approaches (for once!), highlighting that they typically attempt to resolve spam through improvements to their algorithm. This creates a ‘blanket’ penalty against all sites using illegitimate techniques to rank well, rather than against any single site.

However, under severe circumstances where the webspam team feel they can’t solve it algorithmically, manual actions are implemented. A quote from Google’s John Mueller during one of their periodic Webmaster Hangouts confirms this:

“…from a manual [action] point of view we try to take action when we realize that we can’t solve it algorithmically. When something is really causing a problem… that’s some place where the manual webspam team might step in and say we need to take action here.”

John goes on to describe how manual actions are “something which we try to do fairly rarely and usually really in kind of the extreme cases”, which explains how surprised we were to see our client receive such an extreme slap on the wrist.

WHEN MIGHT A MANUAL ACTION BE GIVEN?

The Webmaster Help Centre page on manual actions details 12 different types of manual action that may be applied to a site:

User-generated Spam - An issue for sites with forums, open blog commenting or user profiles, whereby site-users are creating spam usernames, comments or links.

Spammy Free Host - For sites which offer free-hosting services, when a high percentage of hosted sites appear spammy, manual action may be applied to the host rather than the individual entities.

Structured Data Issue - Applied when sites implement manipulative structured data, mark up content invisible to users or break the structured data guidelines provided.

Unnatural Inbound Links - A common manual action applied when aggressive link-building tactics, such as link-buying or link schemes, have been implemented in an attempt to manipulate the search algorithm.

Unnatural Outbound Links - Applied to sites which have a suspicious volume of outbound links to other sites or manipulative anchor text, indicating the site may be part of a link-buying network or link scheme.

Thin Content With Little Or No Value - Given to sites with lots of pages which offer no value to a user, such as those with scraped content, lots of affiliate links, or auto-generated content.

Cloaking / Sneaky Redirects - Given to sites which either redirect or show different content to users than they are presenting to Google’s crawlers.

Pure Spam - Given to the worst of the worst, sites with huge volumes of scraped or stolen content, gibberish content or repeated violations of Google’s guidelines.

Cloaked Images - Similar to cloaking content, this is applied to sites who show different images to users than they do to crawlers, or who purposely obscure images once a user reaches the site.

Hidden Text / Keyword Stuffing - Applied to sites who attempt to manipulate search algorithms by unnaturally stuffing keywords into their page, or who attempt to add additional content to the page for crawlers, but hide it from users through visual styling.

AMP Content Mismatch - For sites whose Accelerated Mobile Pages (AMP) are dramatically different to the content provided on the original (canonical) page.

Sneaky Mobile Redirects - Applied to sites which redirect mobile users to unrelated URLs, rather than redirecting in a beneficial way such as sending them to the mobile version of a site.

When a site is found to be violating one or more of these Webmaster Guidelines, it doesn’t necessarily mean a manual action will be instantly applied. In some cases the webmaster may receive warnings via Google Search Console highlighting errors.

Additionally, Google have stated that manual action application is a peer-reviewed process, enabling other team members to provide a sanity-check as to whether a manual action is warranted.

Again, this helps to highlight just how wrong something must be on a site before a manual action is given.

HOW DO YOU FIX A MANUAL ACTION PENALTY?

Recovery from a manual action penalty is, in many cases, easily achieved by rectifying the violation in question, and for some webmasters simply undoing an honest mistake is enough.

On their Help Centre page, the Google webspam team provide detailed recommended actions to take for each possible manual action penalty. In some cases, this covers what to do if you have unintentionally violated guidelines as well as what to do when you have done so knowingly.

Each recovery process is a little different and some are more complicated than others, potentially requiring the assistance of someone experienced with SEO and manual action removal.

However, all of them ultimately end with reaching out to the webspam team via Search Console with a Reconsideration Request explaining the quality issue on your site which caused the manual action, describing the steps you’ve taken to fix the issue, and documenting the outcome of your efforts.

CASE STUDY: RECOVERING FROM AN UNNATURAL INBOUND LINKS MANUAL ACTION

As we’ve just discussed, manual actions can come in many forms. The one we’ll be focussing on was specifically given for ‘Unnatural inbound links’ after Google detected ‘a pattern of unnatural, artificial, deceptive, or manipulative links’ pointing at our client’s site.

We’ll run through the process we followed after receiving notification of the manual action in a timeline format. Following it, the client’s manual action went from applied to revoked in just over one month.

DAY 1 (5TH AUGUST 2019)

As with most bad news, our notification of the manual action came at a bad time - around midday on a Sunday - and wasn’t picked up until Monday morning, at which time we swiftly informed the client and requested they stop any inbound link building activities until we’d conducted an analysis.

Google will often inform you whether the issue is with a particular area of your site, i.e. website.com/directory or the whole domain. In this case, the whole domain had been affected, so we knew everything had to be analysed.

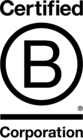

Following the recommendations provided, we began collating a list of all links to the client’s site. They had been earning links for many years, so with the manual action coming in August, we made the decision to only analyse links from 2019 under the assumption that older links would have already triggered a penalty if they were deemed unnatural.

Google recommends using their ‘Top Linking Sites’ report within Search Console, however:

It is limited to 1000 rows;

It doesn’t state when the links were first identified.

Instead, we combined their report with the Ahrefs Referring Domains report, which enabled us to select backlinks which were ‘First Seen’ at any point during 2019. Using Ahrefs also immediately identified a probable time period during which the unnatural links were acquired.
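The date filter described above can be sketched in a few lines of Python. This is a minimal illustration rather than the process we actually ran: the column names `domain` and `first_seen`, the ISO date format and the sample rows are all assumptions, so adjust them to match whatever your own backlink export contains.

```python
import csv
import io
from datetime import datetime


def links_first_seen_in(rows, year, date_field="first_seen"):
    """Keep only the rows whose first-seen date falls within the given year."""
    kept = []
    for row in rows:
        # Assumes the date starts with YYYY-MM-DD; any time suffix is ignored.
        seen = datetime.strptime(row[date_field][:10], "%Y-%m-%d")
        if seen.year == year:
            kept.append(row)
    return kept


# Hypothetical export data standing in for a real backlink report.
sample_export = """domain,first_seen
old-directory.example,2016-03-14
parent-blog.example,2019-05-02
news-site.example,2019-01-20
"""

rows = list(csv.DictReader(io.StringIO(sample_export)))
recent = links_first_seen_in(rows, 2019)
print([row["domain"] for row in recent])
```

With a real export you would read the file from disk instead of the inline sample, then carry the filtered rows into your review spreadsheet.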

Exporting both a list of referring domains and individual backlinks, we also quickly identified a series of patterns in recent links to the client’s site.

Alongside a series of very low domain rating sites and obvious spam domains, we also spotted a series of domains in the 10-50 domain rating range which were obvious ‘mom blogger’ sites.

Experience has shown that many of these sites take payment for articles and links on their sites, providing a good place to start our investigation.

DAY 2 - 4

Armed with our spreadsheet, Ahrefs reports and a boatload of caffeine, we began filtering through the 1014 referring domains which contributed a combined 7585 backlinks to the client’s site.

Thanks to the patterns we had identified the day before, we managed to throw a few filters onto the data to ease this process.

We started by filtering for domains not using the TLDs we’d usually expect to see (.com/.co.uk/.org/.ac.uk/etc.). Combining this with Ahrefs domain rating estimates, we quickly managed to check off large volumes of links from low value domains - the kind of chaff that any site naturally picks up after being available on the web for a while.

We then also filtered for the usual suspects that we knew could probably be ignored, like local news directories and the like, leaving us with a solid ~750 domains left to analyse. Not what I would usually look forward to on a Tuesday afternoon, but a lot better than we had before!
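As a rough sketch of this first-pass triage, the Python below splits domains into ‘chaff’ and ‘needs manual review’. The TLD list comes from the paragraph above, but the rating threshold of 10 and the sample domains are assumptions made for illustration, not the exact rules we applied.

```python
# TLDs we would normally expect to see linking to a UK site.
EXPECTED_TLDS = (".com", ".co.uk", ".org", ".ac.uk")

# Assumed cut-off: ratings below this are treated as very low value.
LOW_RATING_THRESHOLD = 10


def triage(domains):
    """Split (domain, rating) pairs into low-value chaff and domains to review by hand."""
    chaff, review = [], []
    for domain, rating in domains:
        unusual_tld = not domain.lower().endswith(EXPECTED_TLDS)
        if unusual_tld and rating < LOW_RATING_THRESHOLD:
            chaff.append(domain)
        else:
            review.append(domain)
    return chaff, review


# Hypothetical referring domains with estimated domain ratings.
sample = [
    ("cheap-links.xyz", 2),
    ("parent-blog.co.uk", 34),
    ("forum.info", 45),
    ("tiny-site.com", 1),
]

chaff, review = triage(sample)
print("Chaff:", chaff)
print("Review manually:", review)
```

A filter like this only narrows the pile. Anything it leaves in the review list still needs a human to look at anchor text, context and where else the site links out to.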

Over the following few days, we then followed the arduous process of manually reviewing each site, and sometimes each individual link from a site, for quality. Using criteria such as anchor text, relevancy to the site and content the link was included in, and where else the site linked out to, we eventually removed all of the organically acquired and earned links, finishing with a solid list of suspects to interrogate further.

Some of these had been very obviously acquired through outdated methods - profiles, forums, blog comments, etc. The key result, however, was 99.9% confirmation of our suspicion of link-buying.

In fact, over 125 of the linking domains were parent blogger sites, clearly marking posts featuring our client’s links as ‘collaborative posts’, ‘sponsorships’, etc.

DAY 5

With our list of potentially bought links, we approached the client to discuss our findings and make sure our assumptions were absolutely correct.

Turns out we were right on the money, and the client had been lured in by two fairly well-known, UK-based link-building companies to essentially buy links.

These third parties had then connected to their network of bloggers and paid for each placement of the client’s link, often included in articles featuring similar companies and related products.

DAY 6 - 18

Confident that we had found the root cause of the manual action, the next couple of weeks consisted of reversing the damage.

Following Google’s recommendations, we first attempted to get all suspicious links marked with ‘nofollow’, indicating to Google’s crawlers that we did not want attention paid to them. To achieve this, we coordinated with the third parties via the client, making them fix the problem they had caused and in turn saving us a lot of time and outreach.

For the other links we had identified as negatively impacting the client’s backlink profile, we conducted outreach requesting that a similar rel="nofollow" be added to links or, in some cases, that the offending article be removed completely.
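Once webmasters say they have updated a link, it is worth verifying the change. The sketch below uses Python’s standard-library HTML parser to report whether each link to a target domain carries a nofollow value. The `LinkAudit` class, the `client.example` domain and the sample markup are hypothetical, and in practice you would fetch each linking page’s HTML first.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkAudit(HTMLParser):
    """Collect links to a target domain and whether each one is marked nofollow."""

    def __init__(self, target_domain):
        super().__init__()
        self.target_domain = target_domain
        self.results = []  # list of (href, is_nofollow) tuples

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href") or ""
        host = urlparse(href).hostname or ""
        # Match the bare domain and any subdomain such as www.
        if host == self.target_domain or host.endswith("." + self.target_domain):
            rel_tokens = (attr_map.get("rel") or "").lower().split()
            self.results.append((href, "nofollow" in rel_tokens))


# Hypothetical snippet of a blogger's article containing two client links.
page = """
<p>Try <a href="https://client.example/product">this product</a> and
<a rel="nofollow noopener" href="https://www.client.example/">this brand</a>,
or read <a href="https://other.example/">something else</a>.</p>
"""

audit = LinkAudit("client.example")
audit.feed(page)
for href, is_nofollow in audit.results:
    print(href, "nofollow" if is_nofollow else "FOLLOWED")
```

Any link still reported as followed goes back on the outreach list, or onto the disavow list if the webmaster cannot be reached.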

Finally, as advised in Google’s final recommendation, we used the disavow tool to disavow suspect links which we could not get removed any other way.

It’s important to note that the disavow tool is a last resort option, and shouldn’t be used without proper knowledge of the impact. The tool essentially tells Google, “we do not wish our site to be associated with these links or domains. Ever.”

It’s also key to note that you can’t just get away with disavowing all of the negative links; you must demonstrate reasonable effort to fix the problems causing the manual action. Google explicitly state:

If you can get a backlink removed, make a good-faith effort to remove the link first. Blindly adding all backlinks to the disavow file is not considered a good-faith effort, and will not be enough to make your reconsideration request successful.

For multiple links from the same domain to your site, use the "domain:" operator in the disavow file for convenience.

Make sure that you don't disavow organic links to your site.

Simply disavowing all backlinks without attempting to remove them might lead to rejection of your request.
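To illustrate the "domain:" operator mentioned in Google’s guidance, here is a minimal Python sketch that builds the text of a disavow file: one entry per line, whole domains prefixed with `domain:`, individual URLs listed as-is, and comment lines starting with `#`. The `build_disavow` helper and the example domains are hypothetical, and the list should only ever contain links you have already tried and failed to get removed or nofollowed.

```python
def build_disavow(domains, urls=(), note=""):
    """Return disavow file text with de-duplicated, sorted entries."""
    lines = []
    if note:
        lines.append("# " + note)
    # Whole domains use the "domain:" operator.
    for domain in sorted({d.strip().lower() for d in domains}):
        lines.append("domain:" + domain)
    # Individual pages are listed as full URLs.
    for url in sorted(set(urls)):
        lines.append(url)
    return "\n".join(lines) + "\n"


# Hypothetical entries standing in for a reviewed suspect list.
disavow_text = build_disavow(
    domains=["Spam-Directory.example", "paid-blog.example", "paid-blog.example"],
    urls=["http://forum.example/profile/123"],
    note="Links we could not get removed or nofollowed",
)
print(disavow_text)
```

The resulting text would be saved as a plain .txt file and uploaded through the disavow tool in Search Console.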

At this point, we also chose to clean up a few of the client’s previously acquired ‘questionable’ spam links with the disavow tool.

DAY 19

Finally, we had reached the point where we felt confident enough to ask Google to reconsider the manual action and remove the client’s penalty.

Following their requirements to explain the quality issue on the site, describe the steps taken to fix the issue, and document the outcome of our efforts, we submitted a reconsideration request written from the perspective of the client which read as follows:

FAO Google Webmaster Team, [CLIENT NAME] received notification of a manual penalty on 4th August 2019 for “Unnatural links to your site”.

This penalty has been taken very seriously by the team at [CLIENT NAME] and our agency representatives.

After extensive investigatory work analysing links to our site shown both within Google Search Console and through third-party tools such as AHRefs, we have identified a series of links pointed at pages on [CLIENT SITE] which have been acquired as part of strategies that may be classified as unnatural, artificial, deceptive or manipulative of Google’s search algorithms. We take responsibility for building many of these links in-house, and via a third-party providing link-building services.

To remedy this issue, we have manually reviewed each and every link identified from GSC and AHRefs to identify links which are clearly, or may have the potential to be classified as, the offenders mentioned above. Where possible we have reached out to the Webmasters of sites with offender links requesting for them to update them with a “nofollow” directive, or in the case of profiles on low-value directories, to remove the entry entirely.

We have also liaised with the third-party, [NAME], requesting for them to approach each site on which they secured a link to [CLIENT SITE] to request the addition of a “nofollow” directive. They have done this for a list of X domains, of which the majority have added the directive or removed the backlink, however we are still waiting to hear back from 5 domains due to their Webmasters being on vacation.

Finally, where we could not remove links for various reasons, we have updated and submitted a new disavow file containing an additional X domains where links were ‘first seen’ during 2019 according to AHRefs and which we would agree are low quality, unnatural and potentially manipulative.

We have also received internal guidance and training from our agency representatives on the role of links within search algorithms and the value of natural, organic links earned through providing legitimate and valuable content to searchers. It is in [CLIENT NAME]’s greatest interest to continue providing high quality, relevant and authoritative content and be considered as a reliable source of advice and guidance on all aspects of [CLIENT INDUSTRY]. By continuing in this we align ourselves with Google’s Webmaster Guidelines and ethical optimisation for search.

We kindly request that the Google Webmaster Team reviews this request for reconsideration and removal of the manual penalty applied to [CLIENT NAME]. Further evidence of our changes and link review documentation can be provided on request.

We also had a detailed CSV prepared demonstrating the links we had reviewed and our verdicts on each as further evidence should the webspam team have requested to see it.

Observant readers will also notice a point in the reconsideration request mentioning guidance and training on ethical link-building practices. This consisted of us having a discussion and providing written guidance to the client’s content and link-building team on where they had gone wrong, what to avoid in the future, and how to move forwards in the best way possible.

DAY 32 (6TH SEPTEMBER 2019)

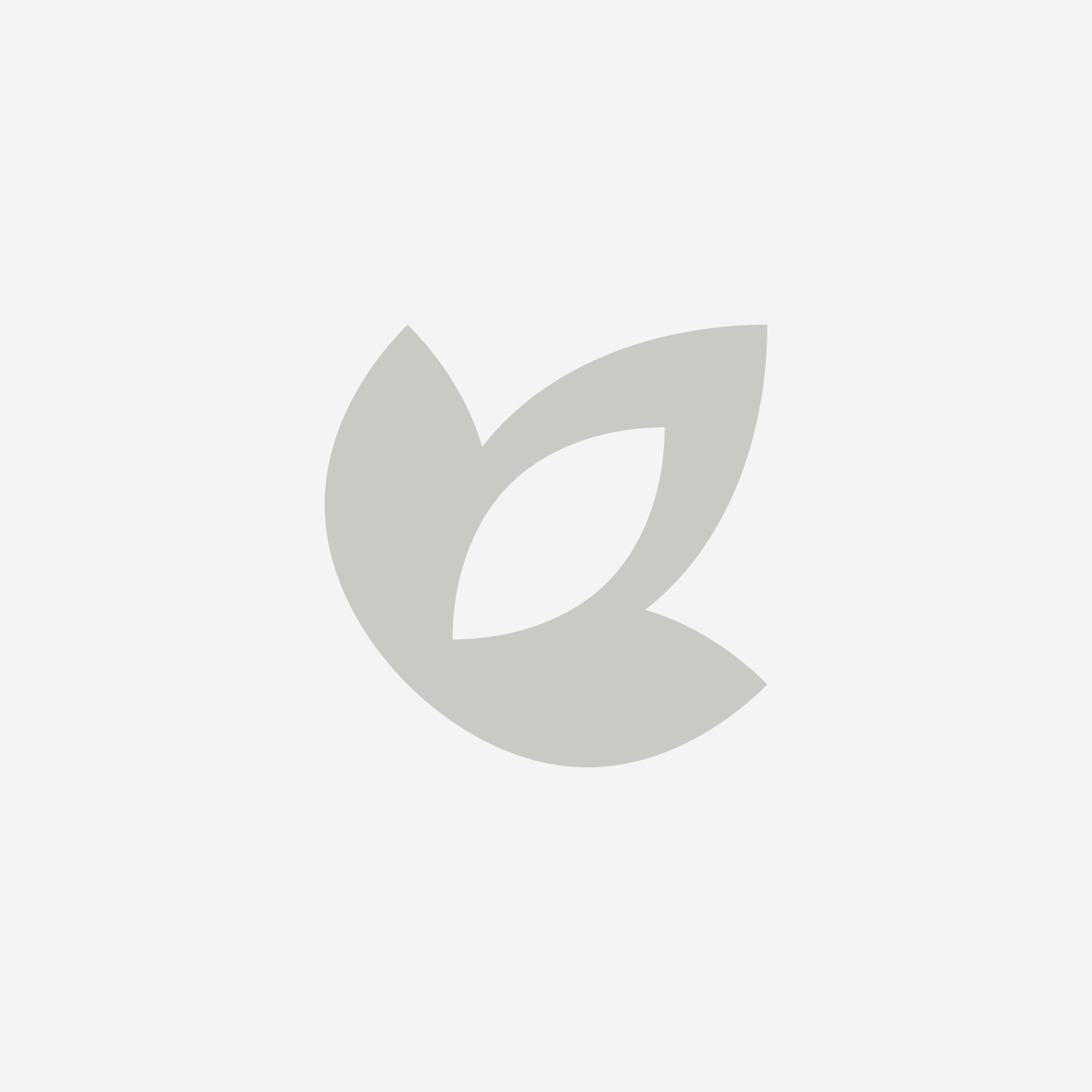

After waiting for a couple of weeks, we finally received a response via Google Search Console with the good news that our reconsideration request had been approved and the manual action revoked.

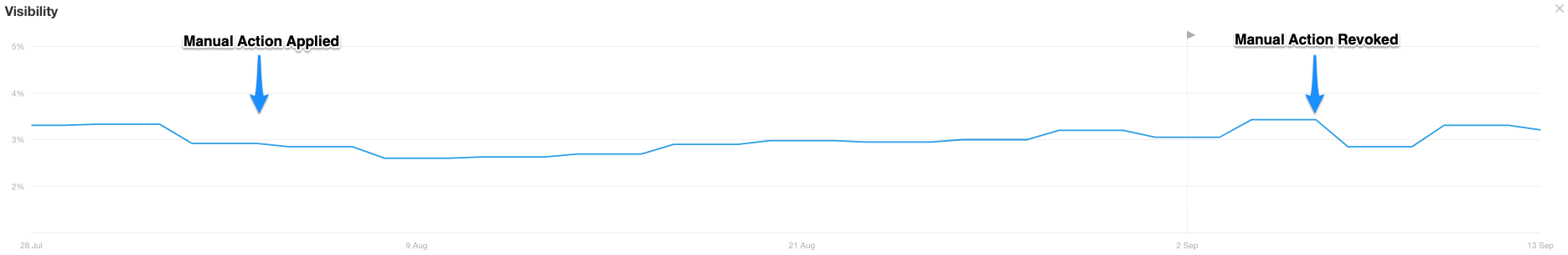

Reviewing the month in between receiving the manual action and getting it revoked, it turned out that Google had been fairly soft on our client’s site and we saw very little impact on their traffic volumes and SERP visibility.

Compared to what other webmasters and SEOs have reported seeing following manual actions, we were extremely lucky.

This may have been due in part to the speed at which we responded to the violation, or that during the peer review process to decide on the manual action, the webspam team decided the infraction wasn’t severe enough for more than a slap on the wrist.

IN SUMMARY

Overall, our swift response and quick identification of the issues leading to the manual action allowed us to deal with what could have been a black mark against our client’s site for the foreseeable future.

However, there were also a series of important lessons to be learnt:

Utilise every tool at your disposal to analyse and identify the root causes of any manual actions which have been applied, reviewing work in reverse chronological order to rule out obvious non-issues and identify suspects.

Be thorough and follow the guidelines Google provide to assist with manual action reconsideration, for example making a reasonable effort to get a rel="nofollow" directive added to offending backlinks before resorting to the disavow tool.

Make sure to be explicitly clear on how you have followed each recommendation within your reconsideration request, and be ready with documentation to provide as evidence of your actions.

Depending on the size of your site and the severity of the infraction, be prepared to dedicate a lot of time towards fixing a manual action as there’s often no shortcut around the work required. Even following what we outlined in our timeline, we still spent around 20 hours filtering through the client’s backlink profile.

To close, I want to reiterate how lucky we were that the impact from this manual action was so slight. Manual actions may be rare, but Google still come down like a tonne of bricks on sites which try to manipulate, trick, or otherwise deceive their search algorithms.

Also, please don’t buy links - you’re better than that...

If you require expert support with your next SEO or Digital PR campaign, check out our SEO and Digital PR services today.